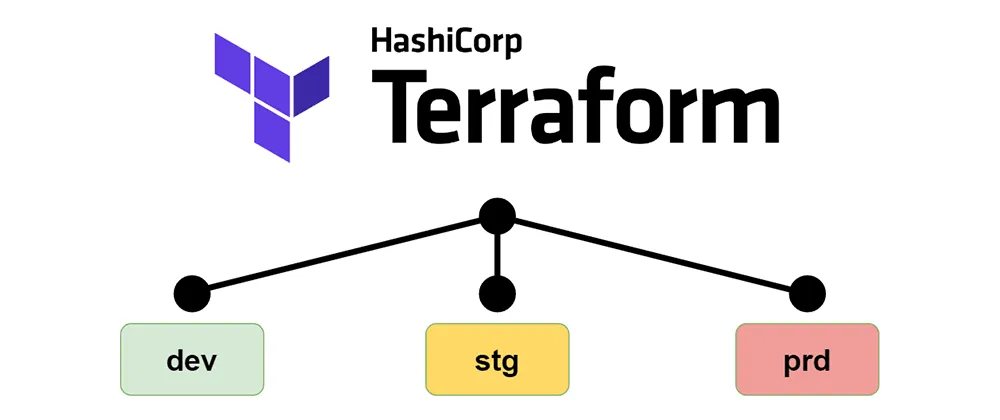

Infrastructure as Code with Terraform for Multiple Environments

Working with Infrastructure as Code (IaC) is incredible. Figuring out the correct repository structure, including a CI/CD pipeline, is not so simple.

Here are my two cents on the subject.

Motivation

- Using git to audit changes and revert to the previous state

- Promoting environments with git’s pull-requests (dev -> stg -> prd)

- Comfortable maintenance of variables per environment

Offered Structure

To examine a live example visit unfor19/terraform-multienv. This repository can also be used as a template.

.
├── .github
│   └── workflows
│       ├── terraform-apply.yml
│       └── terraform-plan.yml
├── cloudformation
│   └── cfn-tfbackend.yml
├── live
│   ├── backend.tf.tpl
│   ├── main.tf
│   ├── providers.tf
│   └── variables.tf
└── scripts
    ├── prepare-backend.sh
    ├── prepare-files-folders.sh
    └── terraform.sh

Assumptions

- Branch names are aligned with environment names, for example dev, stg and prd

- The CI/CD tool supports the variable ${BRANCH_NAME}, for example ${DRONE_BRANCH}

- The directory ./live contains infrastructure-as-code files - *.tf, *.tpl, *.json

- Multiple Environments

  - All environments are maintained in the same git repository

  - Hosting environments in different AWS accounts is supported (and recommended)

- Variables

  - ${app_name} = your-app-name

  - ${environment} = dev or stg or prd

Remote Backend

Creating The Resources

This can be done manually in the AWS Console or by using a terraform module, such as cloudposse/terraform-aws-tfstate-backend.

Initially, I used the terraform module, but then I found myself stuck in a “chicken and egg” situation: I was using terraform to create the resources that store the state of those very resources.

I found it easier to do this task with CloudFormation, which makes it totally a separate process, and it is done once per environment.

I’ve created the script scripts/prepare-backend.sh, which creates an S3 bucket for the state file and DynamoDB table for state lock. The script uses aws-cli and deploys the cloudformation/cfn-tfbackend.yml template.

NOTE: Remote Backend resources are created per environment (branch).
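To make the once-per-environment step more concrete, here is a minimal sketch of how such a deployment command could be assembled. It is not the content of scripts/prepare-backend.sh: the function name backend_deploy_cmd, the stack name pattern and the template parameter names AppName and Environment are assumptions made for illustration, so check them against the real template. The sketch only prints the commands; it does not call AWS.

```shell
#!/bin/sh
# Hypothetical sketch: build the aws-cli command that deploys the backend
# stack (S3 bucket + DynamoDB table) for one environment.
# ASSUMPTIONS: the stack name pattern and the parameter names AppName and
# Environment are illustrative and not taken from cfn-tfbackend.yml.
# Usage: backend_deploy_cmd <app_name> <environment> <region>
backend_deploy_cmd() {
  app_name=$1 environment=$2 region=$3
  printf '%s ' aws cloudformation deploy \
    --region "$region" \
    --stack-name "${app_name}-tfbackend-${environment}" \
    --template-file cloudformation/cfn-tfbackend.yml \
    --parameter-overrides "AppName=${app_name}" "Environment=${environment}" \
    --no-fail-on-empty-changeset
  printf '\n'
}

# Print one command per environment (branch); nothing is executed here.
for env in dev stg prd; do
  backend_deploy_cmd tfmultienv "$env" eu-west-1
done
```

Printing the command first makes it easy to review what would run against each AWS account before executing it with the right credentials.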

Configuration Per Environment

One of the major pain points of this configuration is setting the Terraform Remote Backend per environment. Each environment must have its own “backend settings”, and the values must be hard-coded since you can’t use variables in the terraform backend code block.

“Only one backend may be specified, and the configuration may not contain interpolations. Terraform will validate this.” (Source: Terraform documentation)

This part was solved by creating a template, which gets modified before executing terraform apply.

terraform {
  backend "s3" {
    region         = "AWS_REGION"
    bucket         = "APP_NAME-state-ENVIRONMENT"
    key            = "terraform.tfstate"
    dynamodb_table = "APP_NAME-state-lock-ENVIRONMENT"
    encrypt        = false
  }
}

The script that applies the modification is scripts/prepare-files-folders.sh. It uses a simple sed command to find and replace AWS_REGION, APP_NAME and ENVIRONMENT. After applying these changes, the file backend.tf.tpl is renamed to backend.tf, which makes it visible to the terraform CLI thanks to its .tf extension.
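As a rough illustration of the find-and-replace step, the sketch below renders a backend template with sed. It is not the actual scripts/prepare-files-folders.sh; the function name render_backend, the temporary directory and the sample values are assumptions made for the example.

```shell
#!/bin/sh
# Hypothetical helper: render backend.tf from backend.tf.tpl by replacing
# the AWS_REGION, APP_NAME and ENVIRONMENT placeholders with real values.
# Usage: render_backend <template> <output> <region> <app_name> <environment>
render_backend() {
  sed \
    -e "s/AWS_REGION/$3/g" \
    -e "s/APP_NAME/$4/g" \
    -e "s/ENVIRONMENT/$5/g" \
    "$1" > "$2"
}

# Demo in a throwaway directory, using the template shown above.
workdir=$(mktemp -d)
cat > "$workdir/backend.tf.tpl" <<'EOF'
terraform {
  backend "s3" {
    region         = "AWS_REGION"
    bucket         = "APP_NAME-state-ENVIRONMENT"
    key            = "terraform.tfstate"
    dynamodb_table = "APP_NAME-state-lock-ENVIRONMENT"
    encrypt        = false
  }
}
EOF

render_backend "$workdir/backend.tf.tpl" "$workdir/backend.tf" eu-west-1 tfmultienv dev
# Writing backend.tf and removing the template ends in the same state as a rename.
rm "$workdir/backend.tf.tpl"
cat "$workdir/backend.tf"
```

Note that this simple form breaks if a value contains a slash or an ampersand, since both are special in a sed replacement; region names, app names and environment names normally don't.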

The prepare-files-folders.sh script has a bigger purpose, and we’ll dive into it in a few moments.

Environment Per Branch

One Dir To Rule Them All

The goal is to have a single directory that contains all of the IaC files (tf, JSON, and so on) and then execute terraform apply from this directory. And again, scripts/prepare-files-folders.sh comes to the rescue.

meirgabay@~/terraform-multienv (dev)$ ./scripts/prepare-files-folders.sh
[LOG] Prepared files and folders for the environment - dev
total 48
drwxr-xr-x   8 meirgabay  staff   256B Dec  9 23:06 .
drwxr-xr-x  19 meirgabay  staff   608B Dec  9 23:06 ..
-rw-r--r--   1 meirgabay  staff   229B Dec  9 23:06 backend.tf
-rw-r--r--   1 meirgabay  staff   775B Dec  9 23:06 main.tf
-rw-r--r--   1 meirgabay  staff    72B Dec  9 23:06 outputs.tf
-rw-r--r--   1 meirgabay  staff    41B Dec  9 23:06 providers.tf
-rw-r--r--   1 meirgabay  staff   1.2K Dec  9 23:06 variables.tf

terraform {
  backend "s3" {
    region         = "eu-west-1"
    bucket         = "tfmultienv-state-dev"
    key            = "terraform.tfstate"
    dynamodb_table = "tfmultienv-state-lock-dev"
    encrypt        = false
  }
}

A new directory dev was created in the project’s root directory, and it contains the files that are needed to deploy the infrastructure. The example above is for dev, but the result would be the same for stg and prd; the only thing that really changes is the Remote Backend configuration.
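The directory step described above can be sketched as follows. This is a simplified stand-in for that part of scripts/prepare-files-folders.sh, not the script itself: the function name prepare_env_dir, the check against the three environment names and the demo files are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical sketch: copy ./live into a directory named after the branch,
# refusing branch names that are not known environments.
# Usage: prepare_env_dir <project_root> <branch_name>
prepare_env_dir() (
  root=$1 branch=$2
  case "$branch" in
    dev|stg|prd) ;;
    *) echo "[ERROR] Unknown environment - $branch" >&2; exit 1 ;;
  esac
  rm -rf "${root:?}/$branch"              # start from a clean directory
  mkdir -p "$root/$branch"
  cp -R "$root/live/." "$root/$branch/"   # copy the IaC files as they are
  echo "[LOG] Prepared files and folders for the environment - $branch"
)

# Demo with a throwaway project root containing two empty files.
root=$(mktemp -d)
mkdir "$root/live"
touch "$root/live/main.tf" "$root/live/variables.tf"
prepare_env_dir "$root" dev
ls "$root/dev"
```

The function body runs in a subshell (note the parentheses), so its variables and the early exit don't leak into the calling script.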

Variables Per Environment

This is where variables and local values come into play.

The variable BRANCH_NAME is assigned to environment, according to the branch that will be deployed with terraform apply. The CI/CD process sets the value of BRANCH_NAME (we’ll get to that).

BRANCH_NAME=dev

$ (dev) terraform apply -var environment="$BRANCH_NAME"

variable "environment" {
  type        = string
  description = "dev, stg, prd"
}

# First two octets of the VPC CIDR, keyed by environment
variable "cidr_ab" {
  type = map(string)
  default = {
    dev = "10.1"
    stg = "10.2"
    prd = "10.3"
  }
}

locals {
  # Resolves to "10.1.0.0/16" when var.environment is "dev"
  vpc_cidr = "${lookup(var.cidr_ab, var.environment)}.0.0/16"
}

module "vpc" {
  source = "terraform-aws-modules/vpc/aws"
  cidr   = local.vpc_cidr
  # omitted arguments for brevity
}

NOTE: I prefer using local values in my resources and modules instead of variables. I even wrote a blog post about it. It’s not mandatory, but I consider it a best practice.
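Since the value of BRANCH_NAME comes from the CI/CD tool, here is a minimal sketch of how it could be resolved from the variables different tools expose. The function name resolve_branch_name and the fallback order are assumptions; DRONE_BRANCH is the Drone variable mentioned in the assumptions, while GITHUB_BASE_REF and GITHUB_REF are the GitHub Actions variables for a pull request's target branch and the pushed ref.

```shell
#!/bin/sh
# Hypothetical sketch: pick the environment name from whichever branch
# variable the CI/CD tool provides. An explicit BRANCH_NAME always wins.
resolve_branch_name() {
  if [ -n "${BRANCH_NAME:-}" ]; then
    echo "$BRANCH_NAME"
  elif [ -n "${DRONE_BRANCH:-}" ]; then
    echo "$DRONE_BRANCH"
  elif [ -n "${GITHUB_BASE_REF:-}" ]; then
    echo "$GITHUB_BASE_REF"            # pull request: the target branch
  elif [ -n "${GITHUB_REF:-}" ]; then
    echo "${GITHUB_REF#refs/heads/}"   # push: refs/heads/dev -> dev
  else
    echo "[ERROR] Could not determine the branch name" >&2
    return 1
  fi
}

# Demo in a subshell, simulating a push to stg on GitHub Actions.
(
  unset BRANCH_NAME DRONE_BRANCH GITHUB_BASE_REF
  GITHUB_REF=refs/heads/stg
  # Terraform reads TF_VAR_<name> from the environment, so exporting this
  # is an alternative to passing -var environment=... on the command line.
  TF_VAR_environment=$(resolve_branch_name)
  export TF_VAR_environment
  echo "environment = $TF_VAR_environment"
)
```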

CI/CD

This part is fully covered in the README of terraform-multienv. Here is a summary of the whole process.

Deploying the infrastructure

- Commit and push changes to your repository

  git checkout dev
  git add .
  git commit -m "deploy dev"
  git push --set-upstream origin dev

- CI/CD service (GitHub Actions in my case) is triggered

  - On pull-request to stg or prd, trigger terraform-plan.yml

  - On push to dev, stg, prd, trigger terraform-apply.yml

- Promote dev environment to stg

  - Create a PR from dev to stg

  - The plan to stg is added as a comment by the terraform-plan pipeline, here’s an example

  - Merge the changes to stg, and check the terraform-apply pipeline in the Actions tab
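The trigger rules in the list above boil down to a small decision table. Here it is as a shell sketch; the function name workflow_for and the event names passed to it are assumptions for illustration, and the real routing lives in the workflow files themselves.

```shell
#!/bin/sh
# Hypothetical sketch of the trigger rules:
#   pull request to stg or prd -> terraform-plan.yml
#   push to dev, stg or prd    -> terraform-apply.yml
# Usage: workflow_for <event> <branch>   (event: pull_request | push)
workflow_for() {
  case "$1:$2" in
    pull_request:stg|pull_request:prd) echo "terraform-plan.yml" ;;
    push:dev|push:stg|push:prd)        echo "terraform-apply.yml" ;;
    *)                                 echo "none" ;;
  esac
}

workflow_for pull_request stg   # prints terraform-plan.yml
workflow_for push stg           # prints terraform-apply.yml
workflow_for pull_request dev   # prints none
```

Promoting dev to stg therefore runs both pipelines in sequence: the plan when the PR is opened, and the apply once the merge pushes to stg.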

Alternatives

- Terraform Cloud - Integrates with a git repository; the “environment” is referred to as a Workspace

- terragrunt - Provides extra tools for keeping your configurations DRY, working with multiple Terraform modules, and managing remote state

Final Words

You might have noticed I didn’t mention anything about scripts/terraform.sh. Since you’ve got this far, you deserve to know what it does:

- terraform init

- terraform plan - save the plan to a markdown file plan.md

- terraform apply - run only if the plan contains changes
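A minimal sketch of that init, plan and conditional apply flow could look like the following. It is not the actual scripts/terraform.sh; the function name run_terraform and the plan file name tfplan are assumptions. It relies on the -detailed-exitcode flag of terraform plan, which exits with 0 when there are no changes, 1 on error and 2 when changes are present.

```shell
#!/bin/sh
# Hypothetical sketch of an init -> plan -> apply wrapper.
# Run it from the environment directory (for example ./dev).
run_terraform() {
  terraform init -input=false || return 1

  # -detailed-exitcode: 0 = no changes, 1 = error, 2 = changes present
  plan_status=0
  terraform plan -input=false -detailed-exitcode -out=tfplan || plan_status=$?
  if [ "$plan_status" -eq 1 ]; then
    echo "[ERROR] terraform plan failed" >&2
    return 1
  fi

  # Save a human-readable copy of the plan, e.g. for a pull-request comment
  terraform show -no-color tfplan > plan.md || return 1

  if [ "$plan_status" -eq 2 ]; then
    # Applying a saved plan file does not prompt for approval
    terraform apply -input=false tfplan
  else
    echo "[LOG] No changes, skipping apply"
  fi
}
```

The block only defines the function; calling run_terraform requires the terraform CLI and a configured backend. Applying the saved plan file guarantees that what gets applied is exactly what was written to plan.md.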

I hope you find this useful, and if you do, don’t forget to clap/heart and share it with your friends and colleagues. Got any questions or doubts? Let’s start a discussion! Feel free to comment below.

Originally published at meirg.co.il on December 10, 2020